Ongoing research broadly covers the topics listed below:

- Machine Learning, Deep Learning and Artificial Intelligence

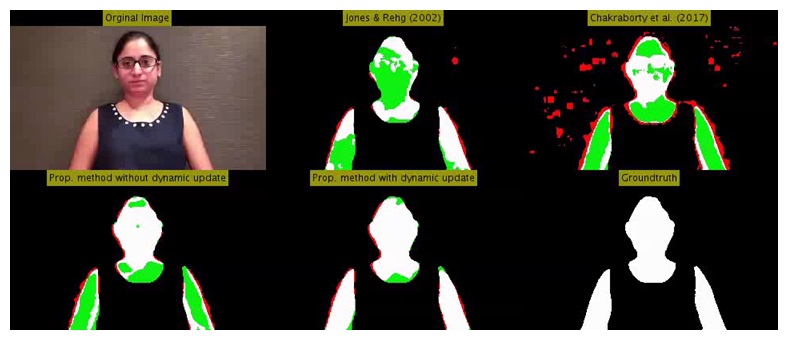

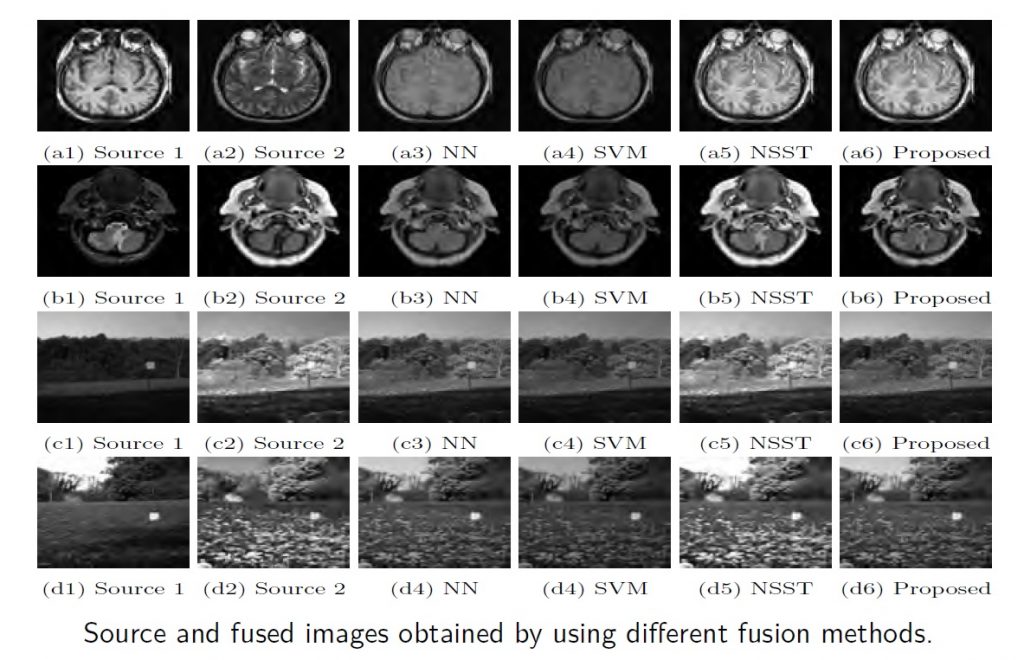

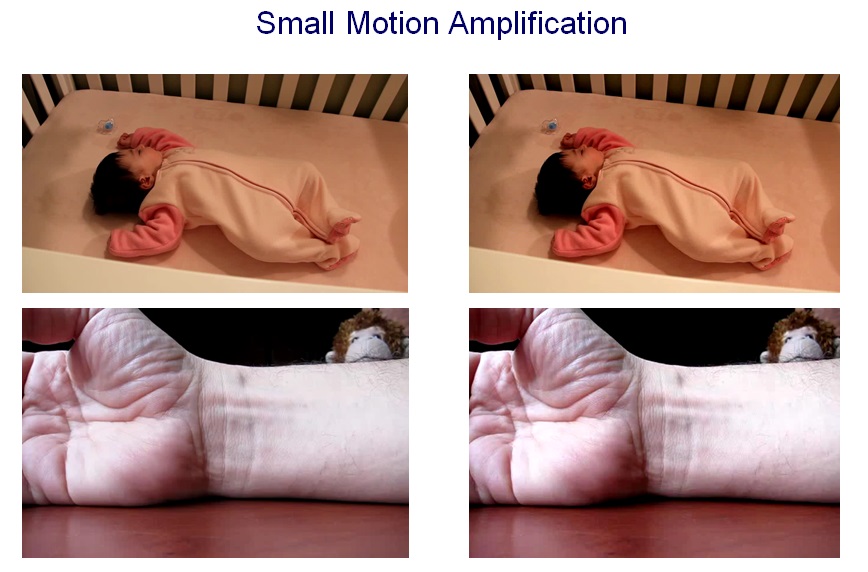

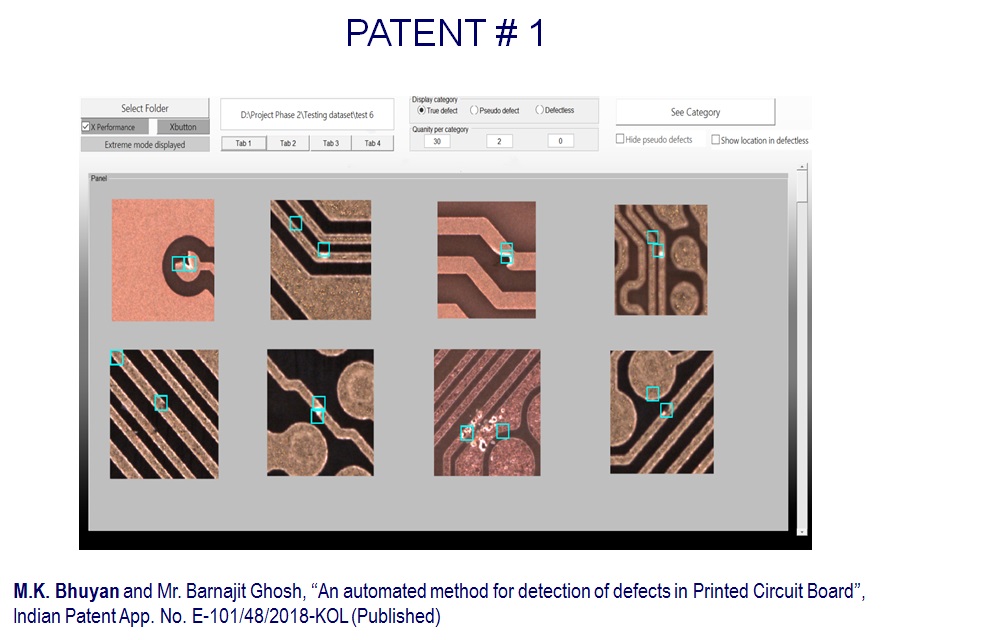

- Image & Video Processing

- Computer Vision

- Biometrics & Human Computer Interactions (HCI)

- Virtual Reality & Augmented Reality

- Biomedical Signal Processing

Research Interests:

Area of specialization: Image/Video Processing, Computer Vision, Pattern Recognition, Biometrics, Machine Learning, Deep Learning, Digital Signal Processing, Human Computer Interaction, AR & VR.

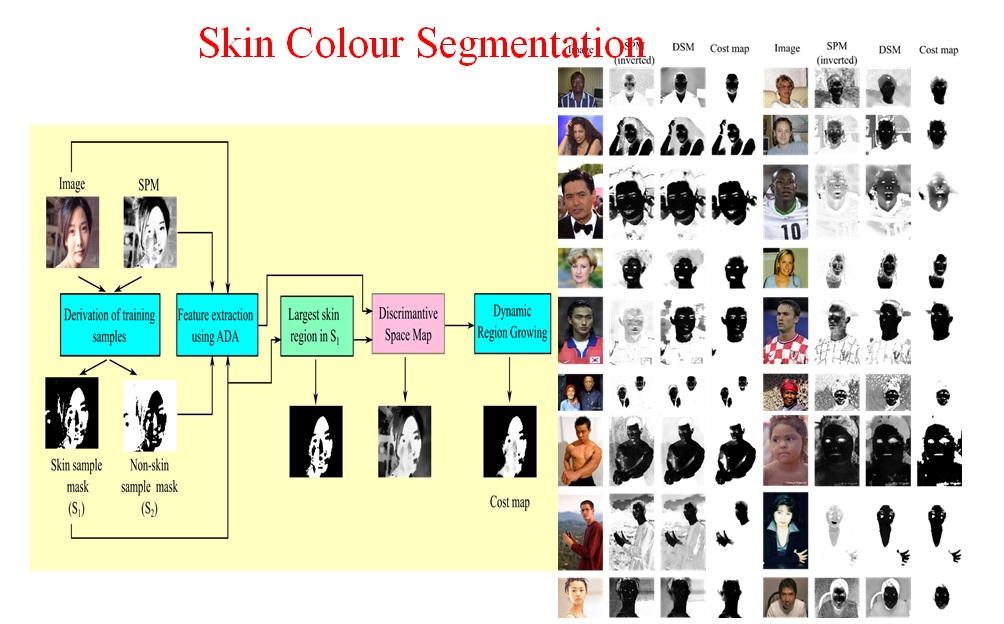

Topics of research: Human computer interaction, gesture recognition, virtual reality, robotic vision, video surveillance, bio-medical image analysis, bio-medical signal processing, image segmentation, application of fuzzy logic and/or neural networks in image understanding, machine learning, biometrics, deep learning methods, etc.

National Award for Best Applied Research and Innovation:

I was awarded the National Award for “Best Applied Research/Technological Innovation Aimed at Improving the Life of Persons with Disabilities” by the Government of India. The award was conferred by the Honourable President of India at Vigyan Bhawan, New Delhi on 6th February, 2013.

https://www.youtube.com/watch?v=7e65nRCMMMM

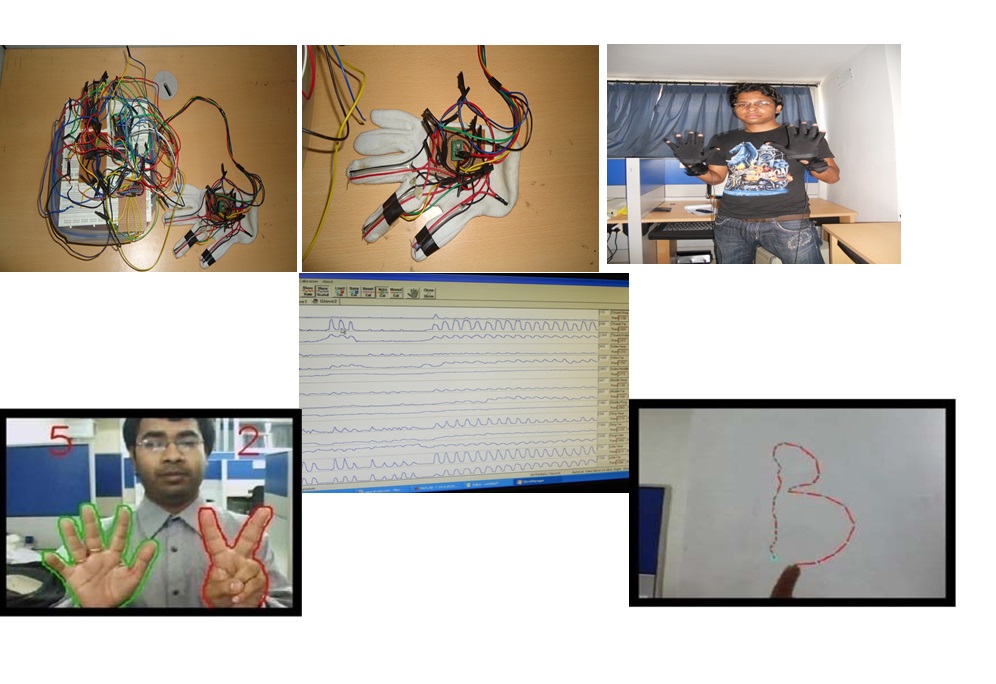

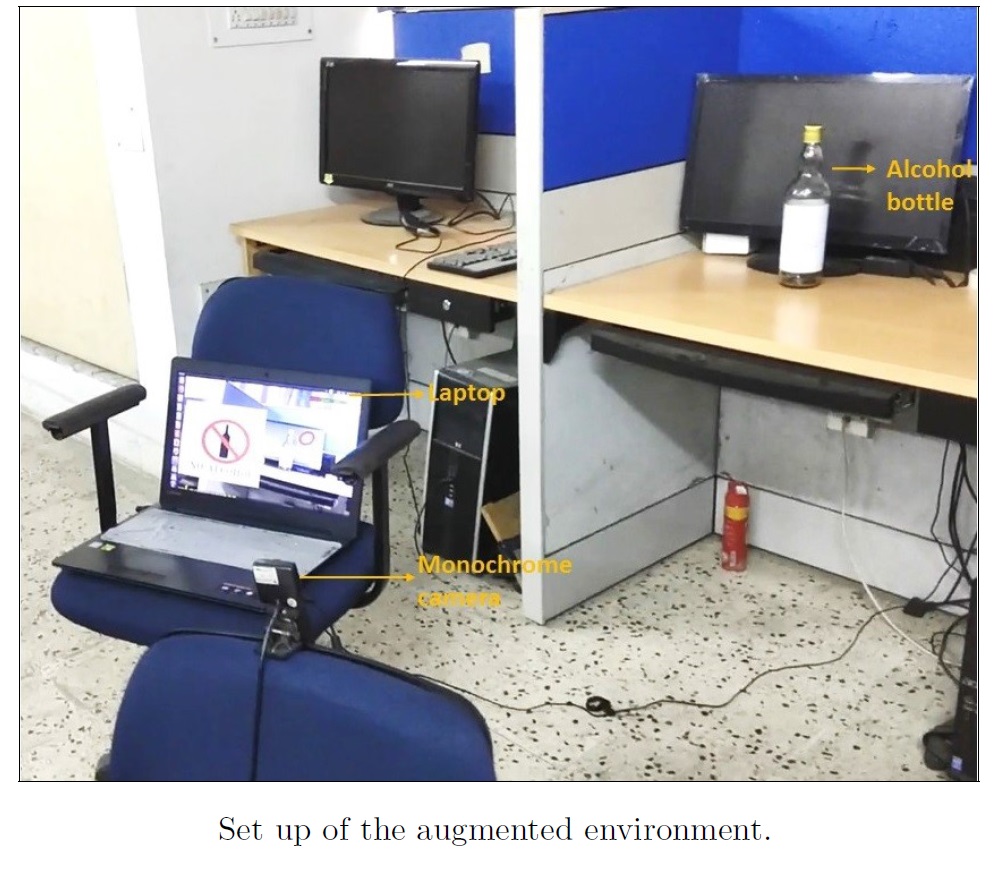

I am currently engaged in extensive research in the area of Human Computer Interaction, aimed at Indian Sign Language recognition and education. The award recognizes my contribution to this specific research domain. I have worked in this field for the last 15 years, including my research at the University of Queensland, Australia and IIT Roorkee, India. This innovation will improve the academic and communicative environment of the hearing impaired. A part of my research is currently financed by MHRD, Government of India under the National Mission for Education through Information and Communication Technology.

Past Research:

I researched people tracking in real-world surveillance video as part of a project on intelligent CCTV surveillance. This research has strong international linkages and interest, as security is an international problem and not just an Indian one. My research focused on human and object tracking in real time for video surveillance. The project was originally funded by the Department of Prime Minister and Cabinet, Government of Australia. I was a research member of the Sensor Group (National ICT, Australia) in the Smart Applications for Emergencies (SAFE) project under the Safeguarding Australia program, Govt. of Australia. I was also a research member of the Security and Surveillance group of the School of ITEE, University of Queensland, Australia.

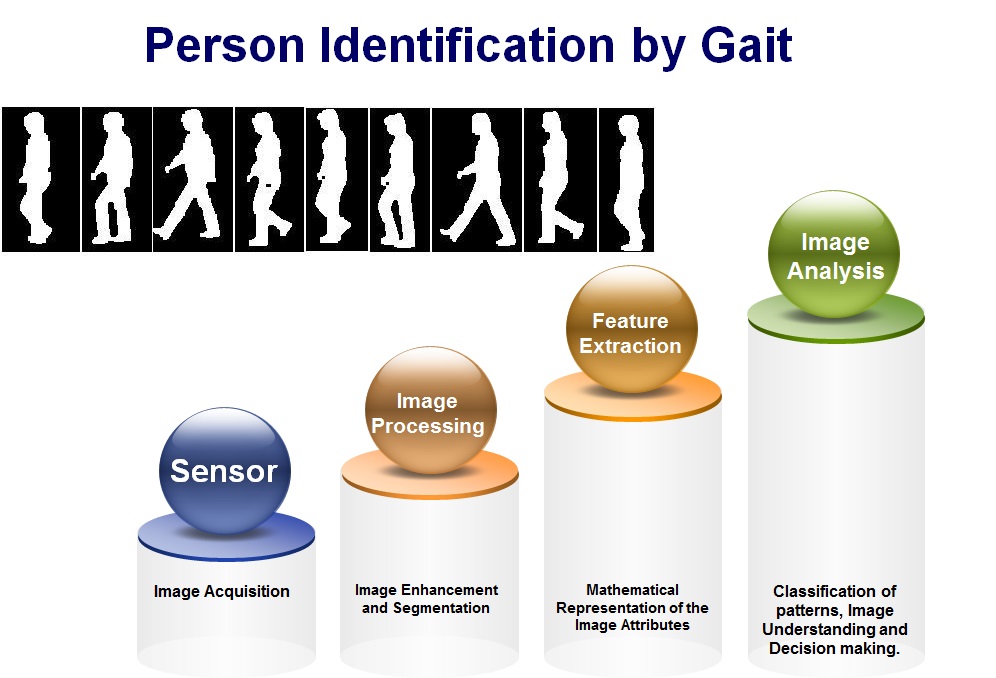

For another sponsored project, I carried out extensive research on gait and hand gesture recognition for Indian Sign Language education/recognition and Human Computer Interaction. The project was funded by MHRD, Government of India.

I also developed course materials on “Computer Vision and Applications” under the sponsored project on “Developing suitable pedagogical methods for various classes, intellectual calibers and research in e-Learning”, which was funded by MHRD, Government of India under the National Mission for Education through ICT.

Research collaborations with Purdue University, USA; Chubu University, Japan; UBC, Canada; and the University of Queensland, Australia. MOU with Chubu University, Japan and collaboration with INSPEC, Japan. Research publications with Prof. Yuji Iwahori, Prof. Brian C. Lovell, Prof. Karl F. MacDorman and Robert J. Woodham.

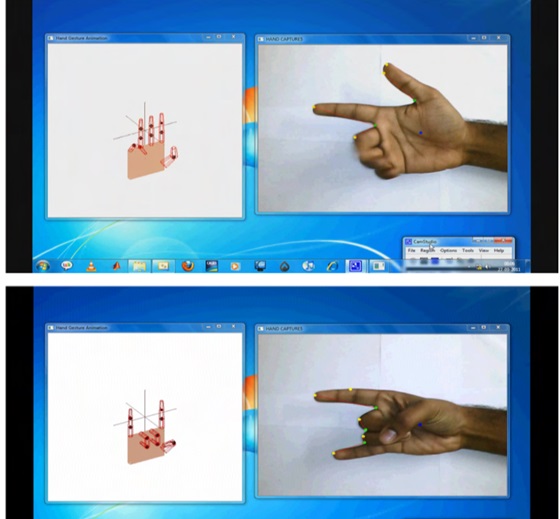

PhD Thesis Title: Vision based Dynamic Hand Gesture Recognition for Human Computer Interactions.

Summary:

Gesture recognition is an area of pattern recognition that has attracted considerable attention in recent years. Hand gesture recognition from visual images finds applications in human-computer interaction (HCI), machine vision, virtual reality, sign language translation and so on. It may be accomplished either by capturing gestural motion using sensor devices or by analyzing gesture images/videos using computer vision techniques. In the context of human-machine nonverbal communication, a visual interface becomes important for establishing communication by understanding human intention from behaviour such as facial expressions and hand gestures. With the increasing interest in HCI, there has been rapid growth in studies on vision-based gesture recognition. However, the algorithms developed so far still have many shortcomings, which motivated this research.

In our research work, we attempted to recognize wide classes of dynamic hand gestures having different spatio-temporal and motion characteristics. We also recognize continuous streams of gestures by detecting individual gesture boundaries with the help of co-articulated hand strokes. In general, there are three types of dynamic hand gestures: (1) gestures with local motion only, where only the fingers and the palm move without any movement of the whole hand/arm; (2) gestures with global motion only, where the hand as a whole moves differently in 3D space to make different gestures; and (3) gestures with both local and global motion, where the fingers and palm make different hand poses while the arm moves in space. In our method, we use the concept of object-based video abstraction to segment the frames into video object planes (VOPs), with each VOP corresponding to one semantically meaningful hand position. Subsequently, we represent a particular gesture as a sequence of key frames that correspond to significantly different VOPs in the video sequence. The core idea of our proposed representation is that redundant frames of the gesture video sequence contribute only to the duration of a gesture pose and are hence discarded for computational efficiency. Following this, a gesture with local motion only is modelled as a sequence of states, each state corresponding to the hand shape and pose in one of the key frames, thereby forming a finite state machine (FSM) representation of the gesture. Recognition is performed on the basis of FSM matching. For gestures with global motion, gesture trajectories are obtained from these key frames and are used for recognition. For trajectory-guided recognition, we propose to use some basic trajectory features such as trajectory length, trajectory shape and the speed of gesticulation.
For continuous streams of gestures, hand motion and hand pause features are used to isolate the component gestures, thereby removing the co-articulation effect. Experimental results show that our proposed gesture recognition module is capable of recognizing different types of hand gestures with an overall accuracy of about 95%. Thus, our proposed gesture recognizer proves to be useful in HCI-based systems.
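The idea of FSM matching over key poses, together with two of the trajectory features mentioned above, can be illustrated with a minimal sketch. This is not the implementation from the thesis: it assumes that an upstream step has already assigned a discrete pose label to every frame and extracted hand centroids, and the names `fsm_accepts`, `recognize` and `trajectory_features`, the pose labels and the frame rate are all hypothetical.

```python
import math


def fsm_accepts(states, pose_labels):
    """Run a linear FSM over per-frame pose labels (assumed input).

    Each state has a self-loop, so repeated (redundant) frames only
    extend the duration of a pose and never change the outcome.
    Assumes consecutive states in `states` are different.
    """
    idx = -1  # no state entered yet
    for label in pose_labels:
        if idx >= 0 and label == states[idx]:
            continue  # self-loop: the current pose is being held
        if idx + 1 < len(states) and label == states[idx + 1]:
            idx += 1  # transition to the next key pose
        else:
            return False  # unexpected pose: this model rejects
    return idx == len(states) - 1  # accept only in the final state


def recognize(pose_labels, gesture_models):
    """Return the first gesture whose FSM accepts the sequence, else None."""
    for name, states in gesture_models.items():
        if fsm_accepts(states, pose_labels):
            return name
    return None


def trajectory_features(points, fps=25.0):
    """Trajectory length (pixels) and mean speed (pixels per second).

    `points` are hand centroids per frame; `fps` is an assumed frame rate.
    """
    length = sum(math.dist(p, q) for p, q in zip(points, points[1:]))
    duration = (len(points) - 1) / fps
    speed = length / duration if duration > 0 else 0.0
    return length, speed


# Hypothetical gesture models: each gesture is a sequence of key poses.
MODELS = {
    "grab": ["open", "half", "fist"],
    "release": ["fist", "half", "open"],
}
frames = ["open", "open", "half", "fist", "fist", "fist"]
print(recognize(frames, MODELS))
```

Because every state carries a self-loop, the matcher gives the same answer whether or not redundant frames are discarded first; dropping them beforehand only saves computation, which is the motivation given above.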

Brief description of Post Ph.D. research:

|

Institute / Laboratory |

Activity |

|

School of Information Technology and Electrical Engineering, University of Queensland, Brisbane Qld 4072, Australia. |

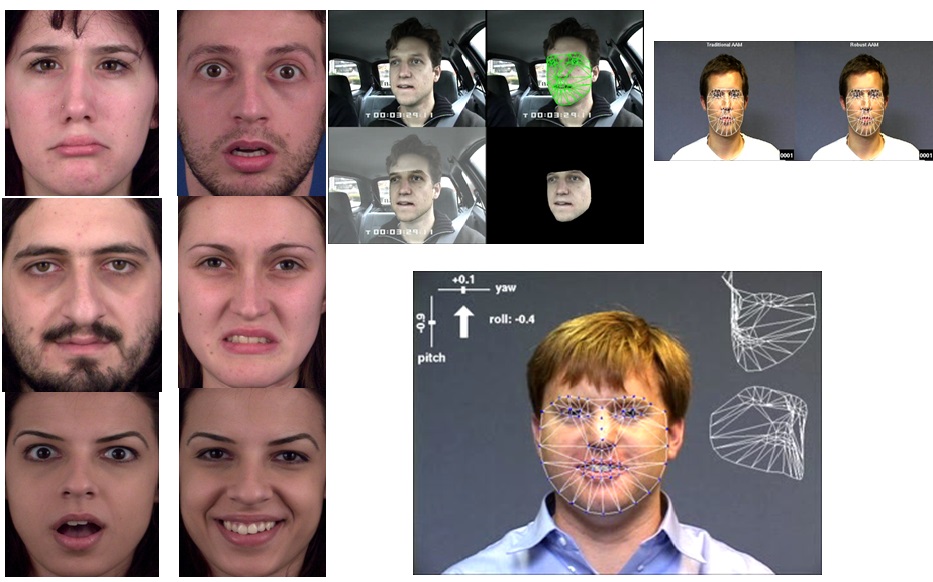

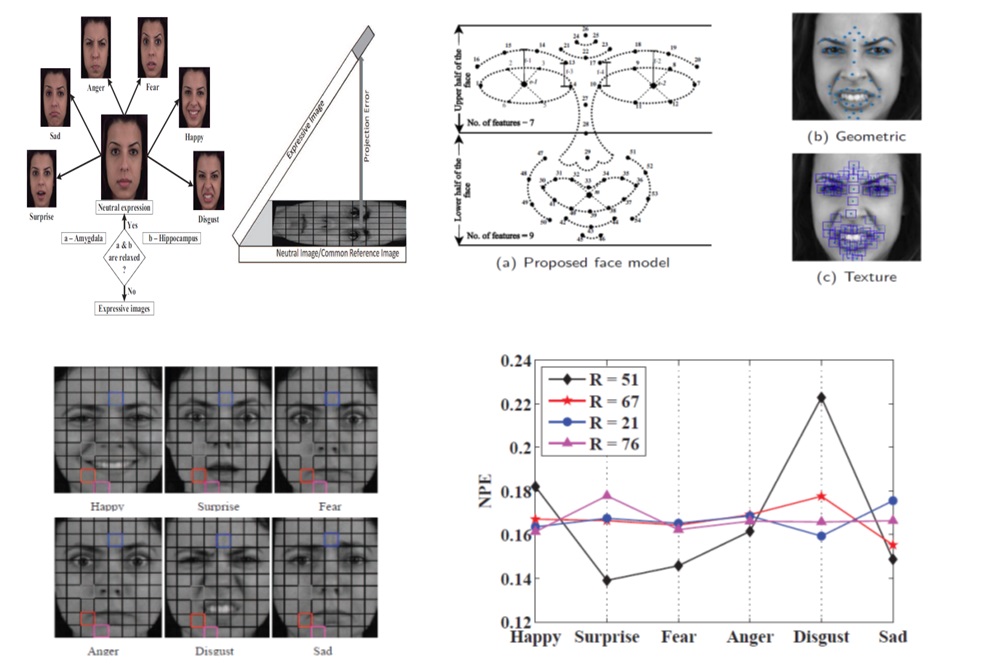

Research member of the Security and Surveillance group of school of ITEE, University of Queensland. Research was primarily focused on face and gait recognition for Human Computer Interaction. |

|

National ICT, Australia, Queensland Research Laboratory, Adelaide Street, Brisbane QLD 4000, Australia. |

Research member of the Sensor Group in the Smart Applications For Emergencies (SAFE) project under safeguarding Australia program, Govt. of Australia. My research was focused on human and object tracking in real time for video surveillance. The research was funded by the Department of Prime Minister and Cabinet, Government of Australia. |

My postdoctoral research focused primarily on people and object tracking for video surveillance applications. The problem of visual inspection of outdoor environments (e.g., airports, railway stations, roads, etc.) has received growing attention in recent times. My research work is a component of a larger project to apply intelligent Closed-Circuit Television (CCTV) to enhance counter-terrorism capability for the protection of mass transport systems. The purpose of intelligent surveillance systems is to perform surveillance tasks automatically, using cameras in place of human eyes. With the development of video hardware such as digital cameras, video surveillance systems are becoming more widely applied and are attracting more researchers to develop fast and robust algorithms. During my research in Australia, I proposed a pedestrian classification and tracking system that is able to track and label multiple people in an outdoor environment such as a railway station. I proposed an approach that combines blob matching with particle filtering to track multiple people in the scene, drawing on the strengths of both techniques. With the proposed method, the system can recognize persons even when they are partially occluded by each other and can continue to track correctly after they merge. In addition, a novel appearance model, derived from the colour information of both the moving regions and the original input colour image, is proposed to track people when foreground extraction is poor. The proposed appearance model also includes spatial information of the human body in both the vertical and horizontal directions, making localization more accurate. In the object classification stage, hierarchical Chamfer matching combined with the particle filter is applied to classify commuters in the railway station into several classes.
Building on single-camera tracking, I extended my work to multi-camera tracking using two-level tracking: image-level tracking and particle-filter-based ground-level tracking. Additionally, a novel method is proposed to extract cars from regions that include shadow areas, based on shape and colour information. The Chamfer template matching score and the non-shadow region edge score are used as the shape information, while the shadow confidence score (SCS) is used as the colour information. To evaluate the performance of the proposed tracking approach, it was tested on surveillance footage obtained at a railway station in Brisbane, Australia. I actively participated in capturing this video data. Experimental results show that the proposed method is significantly better than approaches that consider only the colour information. Some of the experimental results on tracking and background modeling are shown below. Extensive discussions and experimental evaluations of the proposed methods are available in my published/communicated papers.
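To make the particle filtering step more concrete, the following is a minimal sketch of a generic bootstrap particle filter that tracks a single 2D position. It is not the tracker described above: it assumes the measurement in each frame is the centroid of an already matched foreground blob, uses a simple random-walk motion model, and leaves out the appearance model, occlusion handling and multi-person association. The function name and all parameter values are hypothetical.

```python
import math
import random


def track_particle_filter(measurements, n_particles=500, motion_sigma=2.0,
                          meas_sigma=3.0, seed=0):
    """Bootstrap particle filter over 2D positions.

    `measurements` is a list of (x, y) blob centroids, one per frame
    (assumed to come from a separate blob matching step).
    Returns one (x, y) position estimate per measurement.
    """
    rng = random.Random(seed)
    x0, y0 = measurements[0]
    # Initialise the particles around the first measured centroid.
    particles = [(x0 + rng.gauss(0, meas_sigma), y0 + rng.gauss(0, meas_sigma))
                 for _ in range(n_particles)]
    estimates = []
    for zx, zy in measurements:
        # Predict: random-walk motion model (an assumption of this sketch).
        particles = [(px + rng.gauss(0, motion_sigma),
                      py + rng.gauss(0, motion_sigma))
                     for px, py in particles]
        # Update: Gaussian likelihood of the measured centroid.
        weights = [math.exp(-((px - zx) ** 2 + (py - zy) ** 2)
                            / (2 * meas_sigma ** 2))
                   for px, py in particles]
        total = sum(weights)
        if total == 0.0:
            # All particles are far from the measurement: fall back to uniform.
            weights = [1.0 / n_particles] * n_particles
        else:
            weights = [w / total for w in weights]
        # Estimate: weighted mean of the particle positions.
        ex = sum(w * px for w, (px, _) in zip(weights, particles))
        ey = sum(w * py for w, (_, py) in zip(weights, particles))
        estimates.append((ex, ey))
        # Resample in proportion to the weights.
        particles = rng.choices(particles, weights=weights, k=n_particles)
    return estimates


# Synthetic target walking along a straight line (illustrative data only).
truth = [(10.0 + t, 20.0 + 0.5 * t) for t in range(30)]
estimates = track_particle_filter(truth)
```

In a fuller system, the likelihood in the update step would be the place to incorporate an appearance model such as the colour and spatial model described above, rather than the plain distance to a centroid used here.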

Research Profile Summary

1. Vision-Based Hand Gesture & Sign Language Recognition

• Foundational contributions in FSM-based and trajectory-guided gesture recognition (2004–2012).

• Development of attention-based deep networks for hand segmentation, keypoint localization, gesture spotting, and continuous sign language recognition.

• Lightweight real-time models suitable for embedded and resource-constrained systems.

• Recent contribution (2026): EffiSign Network – Comprehensive approach for sign language recognition.

• Publications in leading IEEE, Springer, and Elsevier journals.

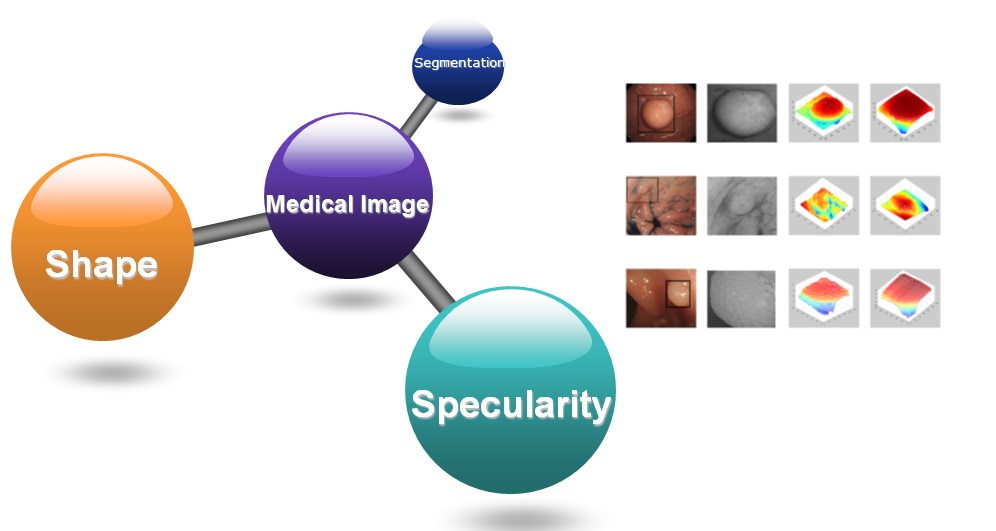

2. Medical Image Analysis & Healthcare AI

• Colonoscopic polyp detection, segmentation, and classification using deep learning frameworks.

• Semi-supervised GAN-based medical image classification techniques.

• MRI lymph node annotation from CT labels.

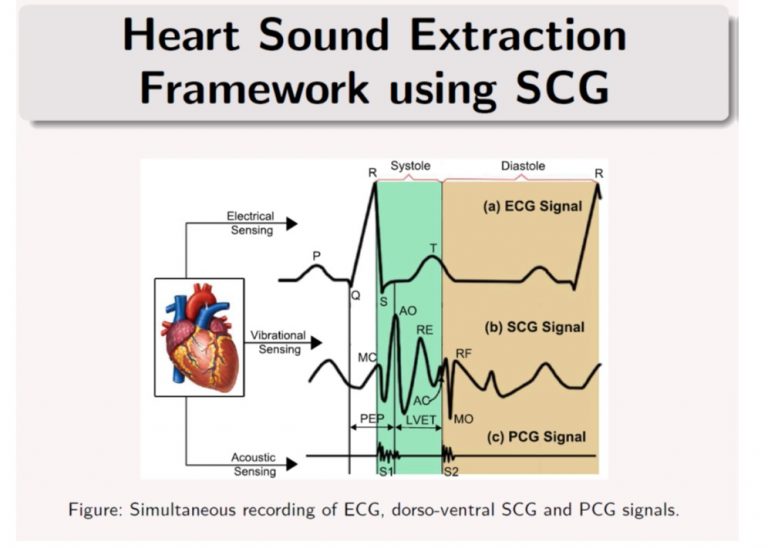

• Non-contact heart rate estimation, SCG-based cardiac analysis, and cuffless blood pressure estimation.

• Breast cancer classification using global and multiscale context fusion.

• Publications in Medical Image Analysis, Scientific Reports (Nature), IEEE JBHI, and Biomedical Signal Processing & Control.

3. Remote Sensing & Hyperspectral Image Super-Resolution

• Super-resolution of remote sensing images using attention and adversarial learning mechanisms.

• Transformer-based SAR image despeckling (IEEE TGRS, 2025).

• Hyperspectral image enhancement using spectral attention networks.

• Satellite image segmentation and roadway extraction using deep learning.

• Publications in IEEE TGRS, IEEE JSTARS, and IEEE Access.

4. Real-Time Semantic Segmentation & Edge AI

• Development of lightweight semantic segmentation networks (DMPNet, SLICENet, DECoDeNet, CTPNet).

• FPGA-based efficient deep learning architectures for edge deployment.

• Accuracy–efficiency trade-off optimization for autonomous driving applications.

• Publications in IEEE TCAS-I, TCAS-II, IEEE Embedded Systems Letters, and IEEE Transactions on Artificial Intelligence.

Publication Strength & Impact

• Over 300 peer-reviewed publications.

• Strong presence in top IEEE Transactions journals.

• Multiple publications in Nature group journals.

• Continuous research contributions spanning over 20 years.

• Extensive international collaborations including Japan, USA, and Middle East institutions.